What is it?

TestFlows is an open-source software testing framework that can be used for functional, integration, acceptance, and unit testing across various teams. It is designed to provide complete control over how tests are written and executed by allowing you to write tests and define test flow explicitly as Python code. It uses an everything-is-a-test approach with a focus on giving test authors flexibility in writing and running their tests.

It is designed to meet the needs of small QA groups at software startups while providing the tools to meet the formalities of large enterprise QA groups that produce professional test process documentation, including detailed test and software requirements specifications as well as requirements coverage, official test, and metrics reports. It is also designed for large-scale test analytics processing using ClickHouse and Grafana, and it is built on top of a messaging protocol that allows writing advanced parallel tests that require test-to-test communication and can be executed in a hive mode on multi-node clusters.

Differentiating Features

TestFlows has the following differentiating features that make it stand out from plenty of other open- and closed-source test frameworks.

The framework has many advanced features, but it allows you to use only the pieces that you need. For example, if you don’t want to use requirements, you don’t have to, and if you don’t want to break your tests into steps or use behavior-driven step keywords, that is perfectly fine. At its heart, it is just a collection of Python modules, so you are always in control and you are not forced to use anything that you don’t need.

An enterprise quality assurance process must always revolve around requirements. However, requirements are most often ignored in software development groups, even at large companies. The framework is designed to break that trend and allows you to write and work with requirements just like you work with code. However, if you are not ready to use requirements, then you don’t have to.

Whether you realize it or not, the only true purpose of writing any test is to verify one or more requirements, and it does not really matter if you have clearly identified these requirements or not. Tests verify requirements, and each requirement must be verified by either a fully automated, semi-automated, or manual test. If you don’t have any tests to verify some requirement, then you can’t be sure that the requirement is met or that the next version of your software does not break it.

With TestFlows, you don’t have to wait for your company’s culture to change in relation to handling and managing requirements. You are able to write and manage requirements yourself, just like code. Requirements are simply written in a Markdown document, where each requirement has a unique identifier and version. These documents are the source of the requirements, which you can convert to Python requirement objects and easily link with your tests. To match the complexities of real-world requirement verification, TestFlows allows one-to-one, one-to-many, many-to-one, or many-to-many test-to-requirement relationships.

Write Python test programs and not just tests. A test program can execute any number of tests. This provides the unrivalled flexibility to meet the needs of any project. Tests are not decoupled from test flow where the flow defines a precise order of how tests are executed. However, you can write many kinds of test runners using the framework if you need them. For example, you can write test programs that read test cases from databases, API endpoints, or file systems and trigger your test cases based on any condition. By writing a test program you are in total control of how you want to structure and approach the testing of a given project.

Through its flexibility, TestFlows helps to avoid test tool fragmentation, where each project in a company eventually starts to use its own test framework, nobody knows how to run tests written by other groups, and reporting across groups becomes inconsistent and difficult to follow.

Provides tools for test authors to break tests into test Steps and use behavior-driven step keywords such as Given, When, Then, and others to make tests and test procedures pleasantly readable. Breaking tests into steps makes test code self-documenting, provides an easy way to auto-generate formal documentation such as a test specification without any extra work, produces detailed test logs, and facilitates test failure debugging.

Test steps can also be made reusable, allowing test authors to create reusable steps modules that greatly simplify writing new test scenarios. Just like you write regular programs by using function calls you can modularize tests by using reusable test steps. Using reusable steps produces clean test code and greatly improves readability and maintainability of tests.

If your test process or your manager requires you to produce formal test specifications that must describe the procedure of each test, then you can easily auto-generate them.

Writing asynchronous tests is as easy as writing regular tests. The framework even allows you to run asynchronous and synchronous test code in the same test program.

Testing real-world applications is usually not done only with fully automated test scenarios. Most often, verification requires a mix of automated, semi-automated, and manual tests.

The framework allows you to unify your testing and provides uniform test reporting no matter what type of tests you need for your project by natively supporting the authoring of automated, semi-automated, and manual tests.

Native support for authoring parallel tests and executing them in parallel, with fine-grain control over what and where runs in parallel. Asynchronous tests are also supported and allow for thousands of concurrent tests to be run at the same time. Mixing parallel and asynchronous tests is also supported.
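As a rough illustration of running synchronous and asynchronous checks in the same program, the sketch below uses only Python’s standard asyncio module. It is not TestFlows code, and the names sync_check, async_check, and run_all are hypothetical.

```python
import asyncio


def sync_check(value):
    """A regular (synchronous) check."""
    return value + 1 == 2


async def async_check(index):
    """An asynchronous check that yields control to the event loop."""
    await asyncio.sleep(0)
    return index >= 0


async def run_all(count):
    """Run one synchronous check, then many asynchronous checks concurrently."""
    results = [sync_check(1)]
    results += await asyncio.gather(*(async_check(i) for i in range(count)))
    return results


results = asyncio.run(run_all(1000))
print(f"{sum(results)} of {len(results)} checks passed")
```

Here asyncio.gather schedules all asynchronous checks on a single event loop, which is what makes a large number of concurrent checks cheap compared to one thread per check.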

Combinatorial tests are supported by allowing you to define tests and steps that can take arguments, and by letting you easily and naturally define tests that check different combinations using TestSketches without writing nested for-loops or calculating combinations beforehand.

In addition, a convenient collection of tools used for combinatorial testing is provided including calculation of Covering Arrays for pairwise and n-wise testing using the IPOG algorithm.
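To make the difference between exhaustive and pairwise combinations concrete, here is a minimal standard-library sketch. It uses a simple greedy selection rather than the IPOG algorithm mentioned above, it does not use the framework’s own tools, and the parameter names and values are hypothetical.

```python
from itertools import combinations, product

# Hypothetical parameters of a system under test.
parameters = {
    "os": ["linux", "macos"],
    "python": ["3.10", "3.11", "3.12"],
    "mode": ["sync", "async"],
}

names = list(parameters)
# Every full combination, generated without nested for-loops.
all_rows = [dict(zip(names, values)) for values in product(*parameters.values())]


def pairs_of(row):
    """Return the set of parameter-value pairs that a single row covers."""
    return {((a, row[a]), (b, row[b])) for a, b in combinations(names, 2)}


def greedy_pairwise(rows):
    """Pick rows until every pair of values appears in at least one picked row."""
    uncovered = set().union(*(pairs_of(row) for row in rows))
    picked = []
    while uncovered:
        # Take the row that covers the most pairs that are still uncovered.
        best = max(rows, key=lambda row: len(pairs_of(row) & uncovered))
        picked.append(best)
        uncovered -= pairs_of(best)
    return picked


pairwise_rows = greedy_pairwise(all_rows)
print(len(all_rows), "exhaustive rows;", len(pairwise_rows), "rows cover all pairs")
```

A greedy pick does not guarantee the smallest possible covering array, but it shows why pairwise testing needs fewer rows than the full product.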

It uses an everything-is-a-test approach that allows unified treatment of any code that is executed during testing. There is no second-class test code. If a test fails during setup, teardown, or execution of one of its actions, the failure is handled identically. This avoids mixing analysis of why the test failed with test execution and results in a clean and uniform approach to testing.

It is built on top of a messaging protocol. This brings many benefits, including the ability to transform test output and logs into a variety of different formats as well as enable advanced parallel testing.

Test logs were designed to be easily stored in ClickHouse. Given that testing produces huge amounts of data, this integration brings test data analytics right to your fingertips.

Standard Grafana dashboards are available to visualize your test data stored in ClickHouse. Additional dashboards can be easily created in Grafana to highlight test results that are the most important for your project.

Avoids unnecessary abstraction layers, such as when test runners are decoupled from tests or the usage of behavior driven (BDD) keywords is always tied to Gherkin specifications. These abstractions, while providing some benefit, in most cases lead to more problems than solutions when applied to real-world projects.

Using Handbook

This handbook is a one-page document that you can search using standard

browser search (Ctrl-F).

For ease of navigation, you can always click any heading to go back to the table of contents.

✋ Try clicking the Using Handbook heading and you will see that the page scrolls up and the corresponding entry in the table of contents is highlighted in red. This handy feature makes sure you are never lost!

There is also an icon at the bottom right of the page that allows you to quickly scroll to the top.

Also, feel free to click on any internal or external references, as you can use your browser’s ⇦ back button to return to where you were.

✋ Try clicking the Using Handbook link and then use your browser’s ⇦ back button to return to the same scroll position in the handbook.

If you find any errors or would like to add documentation for something that is still not documented, then submit a pull request with your changes to the handbook source file.

Supported Environment

✋ Known to run on other systems such as MacOS.

Installation

You can install the framework using pip3

    pip3 install testflows

or from sources

    git clone https://github.com/testflows/TestFlows.git

Upgrading

If you already have TestFlows installed, you can upgrade it to the latest version using the --upgrade option when executing the pip3 install command.

    pip3 install --upgrade testflows

Hello World

You can write an inline test scenario in just three lines.

    from testflows.core import Scenario

and simply run it using the python3 command.

    python3 ./test.py

    Jun 28,2020 14:47:02 ⟥ Scenario Hello World!

Defining Tests

You can define tests inline using the classical Step, Test, Suite, and Module test definition classes or using specialized keyword classes such as Scenario, Feature, Module, and steps such as Background, Given, When, Then, But, By, And, and Finally.

In addition, you can also define sub-tests using the Check test definition class or its flavours Critical, Major, or Minor.

✋ You are encouraged to use the specialized keyword classes to greatly improve the readability of your tests and test procedures.

Given the variety of test definition classes above, fundamentally, there are only four core Types of tests in TestFlows. All other types are either a naming variation of one of the core Types or are special types.

The core and special Types are

- Module
- Suite
- Test
- Step
- Sketch (special)
- Combination (special)
- Outline (special)
- Iteration (special)
- RetryIteration (special)

See Types for more information.

Inline

Inline tests can be defined anywhere in your test program by using the Test Definition Classes above. Because all test definition classes are context managers, they must be used with the with statement, or with async with for asynchronous tests that leverage Python’s asyncio module.

    with Module("My test module"):

Decorated

For re-usability, you can also define tests using the TestStep, TestBackground, TestCase, TestCheck, TestCritical, TestMajor, TestMinor, TestSuite, TestFeature, TestModule, TestOutline, and TestSketch test function decorators.

Similarly to how class methods take an instance of the object as the first argument, test functions wrapped with test decorators take an instance of the current test as the first argument and therefore, by convention, the first argument is always named self.
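The idea of a decorator that supplies the current test as self can be sketched in plain Python. This is only an illustration of the convention, not the framework’s implementation; CurrentTest and decorated_test are hypothetical names.

```python
import functools


class CurrentTest:
    """Hypothetical stand-in for the object a framework passes as self."""

    def __init__(self, name):
        self.name = name


def decorated_test(func):
    """Wrap func so that it receives a test object as its first argument."""

    @functools.wraps(func)
    def wrapper(**kwargs):
        # The wrapper accepts keyword arguments only and supplies self itself.
        return func(CurrentTest(func.__name__), **kwargs)

    return wrapper


@decorated_test
def scenario(self, action=None):
    return f"{self.name}: {action}"


print(scenario(action="driving"))
```

Because the wrapper creates the test object, the caller never passes self explicitly, just as a method call never passes the instance explicitly.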

Calling Decorated Tests

✋ All arguments to tests must be passed using keyword arguments.

For example,

    scenario(action="driving")

Use a test definition class to run another test as

    Scenario(test=scenario)(action="running")

where the test is passed as the argument to the test parameter.

If the test does not need any arguments, use the short form by passing the test as the value of the run parameter.

    Scenario(run=scenario)

✋ Use the short form only when you don’t need to pass any arguments to the test.

This will be equivalent to

    Scenario(test=scenario)()

You can also call decorated tests directly as

    scenario(action="swimming")

Note that a scenario() call will create its own Scenario if and only if it is running within a parent that has a higher test Type, such as Feature or Module.

However, if you call it within the same test Type, then it will not create its own Scenario but will simply run as a function within the scope of the current test.

For example,

    with Scenario("My scenario"):

will run in the scope of My scenario, where self will be an instance of

    Scenario("My scenario")

but

    with Feature("My feature"):

will create its own test.

Running Tests

Top level tests can be run either using the python3 command or directly if they are made executable.

For example, with a top level test defined as

    from testflows.core import Test

you can run it with the python3 command as follows

    python3 test.py

or you can start the file that defines the top level test with

    #!/usr/bin/python3

and then make it executable with

    chmod +x test.py

allowing you to execute it directly as follows.

    ./test.py

Writing Tests

With TestFlows you actually write test programs, not just tests. This means that the Python source file that contains the Top Level Test can be run directly if it is made executable and has #!/usr/bin/env python3 as its first line, or by using the python3 command.

✋ Note that TestFlows only allows one top level test in your test program. See Top Level Test.

Writing tests is actually very easy, given that you are in full control of your test program. You can either define inline tests anywhere in your test program code or define them separately as test decorated functions.

An inline test is defined using the with statement and one of the Test Definition Classes. The choice of which test definition class you should use depends only on your preference. See Defining Tests.

The example from Hello World shows how an inline test can be easily defined.

    #!/usr/bin/env python3

The same test can be defined using a TestScenario decorated function. See Decorated Tests.

    #!/usr/bin/env python3

✋ Note that if the code inside the test does not raise any exceptions and does not explicitly set the test result, the test is considered to be passing and will have an OK result.

In the above example, Hello World is the Top Level Test and the only test in the test program.

✋ Note that instead of just having pass you could add any code you want.

The Hello World test will pass if no exception is raised in the with block; otherwise it will have a Fail or Error result. A Fail result is set if the code raises AssertionError; any other exception results in Error.
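The rule above (no exception gives OK, AssertionError gives Fail, any other exception gives Error) can be sketched with a small context manager. This is a plain-Python illustration and not the framework’s implementation; InlineTest is a hypothetical class, and unlike a real test it does not report its result to a parent test.

```python
class InlineTest:
    """Hypothetical context manager that records a result for its with block."""

    def __init__(self, name):
        self.name = name
        self.result = None

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        if exc_type is not None and not issubclass(exc_type, Exception):
            return False            # let KeyboardInterrupt and SystemExit propagate
        if exc_type is None:
            self.result = "OK"      # no exception: the test passes
        elif issubclass(exc_type, AssertionError):
            self.result = "Fail"    # a failed assertion
        else:
            self.result = "Error"   # any other exception
        # Returning True suppresses the exception so the program can continue.
        return True


with InlineTest("passes") as passing:
    pass

with InlineTest("fails") as failing:
    assert 1 + 1 == 3

with InlineTest("errors") as erroring:
    raise RuntimeError("boom")

print(passing.result, failing.result, erroring.result)
```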

Let’s add a failing assert to the Hello World test.

    from testflows.core import Scenario

The result will be as follows.

    python3 hello_world.py

    Nov 03,2021 17:09:17 ⟥ Scenario Hello World!

Now, let’s raise some other exception, such as RuntimeError, to see the Error result.

    from testflows.core import Scenario

    python3 hello_world.py

    Nov 03,2021 17:14:10 ⟥ Scenario Hello World!

Flexibility in Writing Tests

TestFlows provides unmatched flexibility in how you can author your tests, and this is what makes it adaptable to your testing projects at hand.

Let’s look at an example of how to test the functionality

of a simple add(a, b) function.

✋ Note that this is just a toy example used for demonstration purposes only.

    from testflows.core import *

Now you can put the code above anywhere you want. Let’s move it into a function. For example,

    from testflows.core import *

We can also decide that we don’t want to use Feature and Scenario in this case, but would rather use a Scenario that has multiple Examples with test steps such as When and Then.

    from testflows.core import *

The test code seems to be redundant, so we could move the When and Then steps into a function check_add(a, b, expected) that can be called with different parameters.

    from testflows.core import *

We could actually define all the examples we want to check up-front and generate Example steps on the fly depending on how many examples we want to check.

    from testflows.core import *

We could modify the above code and use Examples instead of our custom list of tuples.

    from testflows.core import *

Another option is to switch to using decorated tests. See Decorated Tests.

Let’s move the inline Scenario into a decorated TestScenario function with Examples and create Examples for each example that we have.

    from testflows.core import *

We could also get rid of the explicit for loop over examples by using Outline with Examples.

    from testflows.core import *

The Outline with Examples turns out to be the exact fit for the problem. However, there are many cases where you would want to have a choice, and TestFlows provides the flexibility you need to author your tests in the way that fits best for you.
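Independently of the framework, the data-driven idea that runs through this section (one parametrized check driven by examples defined up-front) can be sketched in plain Python. The example values below are hypothetical, and the sketch does not use any test definition classes.

```python
def add(a, b):
    return a + b


# Each example is (a, b, expected), defined up-front.
examples = [
    (1, 1, 2),
    (2, 2, 4),
    (-1, 1, 0),
]


def check_add(a, b, expected):
    """Single parametrized check that is reused for every example."""
    actual = add(a, b)
    assert actual == expected, f"add({a}, {b}) = {actual}, expected {expected}"


checked = []
for a, b, expected in examples:
    check_add(a, b, expected)
    checked.append((a, b, expected))

print(f"{len(checked)} examples checked")
```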

Using Test Steps

When writing tests, it is best practice to break the test procedure into individual test Steps. While TestFlows lets you write tests without explicitly defining Steps, doing so is not recommended.

Breaking tests into steps has the following advantages:

- improves code structure

- results in self-documenting test code

- significantly improves test failure debugging

- enables auto generation of test specifications

Structuring Code

Using test Steps helps structure test code. Any test inherently implements a test procedure, and the procedure is usually described by a set of steps. Therefore, it is natural to structure tests as a series of individual Steps. In TestFlows, test Steps are defined and used just like Tests or Scenarios, as Steps also have results just like Tests.

Test Steps can be defined either inline or using the TestStep function decorator, with a combination of both being the most common.

For example, the following code clearly shows that by identifying steps such as setup, action, and assertion, the structure of test code is improved.

    from testflows.core import *

In many cases, steps themselves can be reused between many different tests. In this case, defining steps as decorated functions helps to make them reusable.

For example,

    from testflows.core import *

The Steps above, just like Tests, can be called directly, although this is not recommended.

The best practice, however, is to wrap calls to decorated test steps with inline Steps, which allows you to give each Step a proper name in the context of the specific test scenario and to specify a detailed description when necessary.

✋ Note that because decorated test steps are called within a Step, these calls are similar to just calling a function, which is another advantage of wrapping calls with inline steps. This means that the return value from the decorated test step can be received just like from a function:

    from testflows.core import *

    @TestStep
    def do_something(self):
        return "hello there"

    @TestScenario
    def my_scenario(self):
        with When("I do something",
                description="""detailed description if needed"""):
            value = do_something() # value will be set to "hello there"

Self Documenting Test Code

Using test Steps results in self-documenting test code.

Explicitly defined Given, When, and Then steps, when given proper names and descriptions, make reading test code a pleasant experience, as the test author has a way to clearly communicate the test procedure to the reader.

The result of using test Steps is clear, readable, and highly maintainable test code. Given that each Step produces corresponding messages in the test output, it forces test maintainers to ensure that Step names and descriptions are kept accurate over the lifetime of the test.

Improved Debugging of Test Fails

Using test Steps helps with debugging test fails, as you can clearly see at which Step of the test procedure the test has failed. Combined with a clearly identified test procedure, it becomes much easier to debug any test fails.

For example,

    from testflows.core import *

Running the test program above results in the following output using the default nice format.

    Nov 12,2021 10:56:17 ⟥ Scenario my scenario

If we introduce a fail in the When step, it is easy to see at which point in the test procedure the test is failing.

    Nov 12,2021 10:58:02 ⟥ Scenario my scenario

✋ Note that the failing test result always bubbles up all the way to the Top Level Test, and therefore it might seem that the output is redundant. However, this allows the failure to be examined just by looking at the result of the Top Level Test.

Auto Generation of Test Specifications

When tests are broken up into Steps, generating test specifications is very easy.

For example,

    from testflows.core import *

when executed with the short output format, highlights the test procedure.

    Scenario my scenario

If you save the test log using the --log test.log option, then you can also use the tfs show procedure command to extract the procedure of a given test within a test program run.

    cat test.log | tfs show procedure "/my scenario"

    Scenario my scenario

The full test specification for a given test program run can be obtained using the tfs report specification command.

    cat test.log | tfs report specification | tfs document convert > specification.html

Test Flow Control

The control of the Flow of tests allows you to precisely define the order of test execution. TestFlows allows you to write complete test programs, and therefore the order of executed tests is defined explicitly in your Python test program code.

For example, the following test program defines decorated tests testA, testB, and testC, which are executed in the regression() module in the testA -> testB -> testC order.

    from testflows.core import *

Given that the order of test execution (Flow) is explicitly defined in regression(), we could easily change it from testA -> testB -> testC to testC -> testA -> testB.

Conditional Test Execution

Conditional execution can be added to any explicitly defined test Flow using standard Python Flow Control Tools such as the if, while, and for statements.

For example, a flow can execute testA and only proceed to run other tests if its result is not Fail; otherwise only testA is executed. If the result of testA is not Fail, then testB is run 3 times and testC is executed repeatedly until its result is OK.
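The control flow described above can be sketched with ordinary Python statements. In this sketch the tests are hypothetical stand-in functions that simply return a result string; it does not use the framework’s test definition classes or result objects.

```python
import itertools


# Hypothetical stand-ins for tests; each returns its result as a string.
def run_a():
    return "OK"


def run_b():
    return "OK"


_attempts = itertools.count(1)


def run_c():
    # Reports Fail twice before it finally reports OK.
    return "OK" if next(_attempts) >= 3 else "Fail"


def regression():
    """Run the flow and return the (name, result) pairs in execution order."""
    executed = [("testA", run_a())]
    if executed[0][1] != "Fail":
        for _ in range(3):
            executed.append(("testB", run_b()))
        while True:
            result = run_c()
            executed.append(("testC", result))
            if result == "OK":
                break
    return executed


flow = regression()
for name, result in flow:
    print(name, result)
```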

Creating Automatic Flows

When precise control over test Flow is not necessary, you can easily define a list of tests to be executed in any way you see fit, including using a simple list.

For example,

    # list of all tests

For such simple cases, you can also use the loads() function. See Using loads().

The loads() function allows you to create a list of tests of the specified type from either the current module or some other module, such as my_project/tests.py.

The list of tests can be randomized or ordered, for example, using the ordered() function or Python’s sorted function.

✋ You could also write Python code to load your list of tests from any other source such as a file system, database, or API endpoint, etc.
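As a plain-Python sketch of building a flow from a list, the example below orders and randomizes a list of callables using only the standard library. The check functions are hypothetical stand-ins, and the framework’s loads() and ordered() functions are not used here.

```python
import random


# Hypothetical stand-ins for tests.
def check_login():
    return "OK"


def check_logout():
    return "OK"


def check_profile():
    return "OK"


# list of all tests
tests = [check_profile, check_login, check_logout]

# Ordered by name for a reproducible flow.
ordered_tests = sorted(tests, key=lambda test: test.__name__)

# Randomized with a fixed seed so that the same order can be repeated.
randomized_tests = random.Random(42).sample(tests, k=len(tests))

for test in ordered_tests:
    print(test.__name__, test())
```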

Setting Test Results Explicitly

A result of any test can be set explicitly using the following result functions:

- fail() function for Fail

- err() function for Error

- skip() function for Skip

- null() function for Null

- ok() function for OK

- xfail() function for XFail

- xerr() function for XError

- xnull() function for XNull

- xok() function for XOK

Here are the arguments that each result function can take. All arguments are optional.

- message is used to set an optional result message
- reason is used to set an optional reason for the result. Usually, it is only set for crossed out results such as XFail, XError, XNull and XOK to indicate the reason for the result being crossed out, such as a link to an opened issue
- test argument is usually not passed, as it is set to the current test by default. See current() function.

    ok(message=None, reason=None, test=None)

These functions raise an exception that corresponds to the appropriate result class and therefore, unless you explicitly catch the exception, the test stops at the point at which the result function is called.

For example,

    from testflows.core import *

You can also raise the result explicitly.

For example,

    from testflows.core import *
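Why a result function stops the test at the point of the call can be illustrated with ordinary exceptions. The Result and Fail classes and the fail() function below are simplified stand-ins written for this sketch, not the framework’s own definitions.

```python
class Result(Exception):
    """Hypothetical base class for results that are raised as exceptions."""


class Fail(Result):
    pass


def fail(message=None):
    """Set the Fail result by raising it, which stops the caller right here."""
    raise Fail(message)


executed = []


def my_check():
    executed.append("before")
    fail("value is out of range")
    executed.append("after")  # never reached


try:
    my_check()
except Result as result:
    print(type(result).__name__, "-", result)

print(executed)
```

Catching the exception, as the try block does here, is the only way for code after the call site to keep running.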

Fails of Specific Types

TestFlows does not support adding types to Fails, but the fail() function takes an optional type argument that takes one of the Test Definition Classes, which will be used to create a sub-test with the name specified by the message and failed with the specified reason.

The original use case is to provide a way to separate fails of Checks into Critical, Major and Minor without explicitly defining Critical, Major, or Minor sub-tests.

For example,

    from testflows.core import *

The above code is equivalent to the following.

    from testflows.core import *

Working With Requirements

Requirements must be at the core of any enterprise QA process. There exist numerous proprietary and complex systems for handling requirements. This complexity is usually not necessary, and TestFlows provides a way to work with requirements just like with code and to leverage the same development tools to enable easy linking of requirements to your tests.

In general, when writing requirements, you should think about how they will be tested. Requirements can either be high level or low level. High level requirements are usually verified by Features or Modules and low level requirements by individual Tests or Scenarios.

Writing untestable requirements is not very useful. Keep this in mind during your software testing process.

When writing requirements, you should be thinking about tests or test suites that would verify them, and when writing tests or test suites, you should think about which requirements they will verify.

The ideal requirement-to-test relationship is one-to-one, where one requirement is verified by one test. However, in practice, the relationship can be one-to-many, many-to-one, or many-to-many, and TestFlows supports all of these cases.
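These relationships can be pictured as a mapping between tests and requirements. The sketch below is plain Python and does not use the framework’s Requirement objects; the test names and the requirement names under the Options prefix are hypothetical.

```python
from collections import defaultdict

# Hypothetical links: test name -> names of the requirements it verifies.
verifies = {
    "list directory": ["RQ.SRS001-CU.LS"],
    "list hidden files": ["RQ.SRS001-CU.LS", "RQ.SRS001-CU.LS.Options.All"],
    "long listing": ["RQ.SRS001-CU.LS.Options.Long"],
}

requirements = [
    "RQ.SRS001-CU.LS",
    "RQ.SRS001-CU.LS.Options.All",
    "RQ.SRS001-CU.LS.Options.Long",
    "RQ.SRS001-CU.LS.Options.Recursive",
]

# Invert the mapping: requirement name -> tests that verify it.
verified_by = defaultdict(list)
for test, names in verifies.items():
    for name in names:
        verified_by[name].append(test)

# A requirement with no linked test is not verified by anything.
uncovered = [name for name in requirements if name not in verified_by]
print("uncovered requirements:", uncovered)
```

In this data one test verifies two requirements and one requirement is verified by two tests, while the uncovered list shows the kind of gap that a requirements coverage report is meant to expose.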

Don’t be afraid to modify and restructure your requirements once you start writing tests. It is natural to refactor requirements during the test development process, as this helps to better align requirements with tests and vice versa.

Writing requirements is hard, but developing enterprise software without requirements is even harder.

Requirement Documents

Working with requirements just like with code is very convenient, but it does not necessarily mean that we need to write requirements as Python code.

Requirements form documents such as SRS (Software Requirements Specification) where, in addition to the requirements, you might find additional sections such as introductions, diagrams, references, etc. Therefore, the most convenient way to define requirements is inside a document.

TestFlows allows you to write requirements as a Markdown document that serves as the source of all the requirements. The document is stored just like code in a source control repository such as Git. This allows the same process to be applied to requirements as to the code. For example, you can use the same review process and the same tools. You also get full traceability of who defined a requirement and when, and can keep track of any changes just like for your other source files.

For example, a simple requirements document in Markdown can be defined as follows.

requirements.md

    # Software Requirements Specification

    ## Introduction

    This software requirements specification covers the behavior of the standard
    Unix `ls` utility.

    ## Requirements

    ### RQ.SRS001-CU.LS
    version: 1.0

    The [ls](#ls) utility SHALL list the contents of a directory.

The above document serves as the source of all the requirements and can be

used to generate corresponding Requirement class objects that can be linked with tests

using tfs requirements generate command. See Generating Requirements Objects.

Each requirement is defined as a heading that starts with RQ. prefix and contains

attributes such as version, priority, group, type and uid defined

on the following line, which must be followed by an empty line.

1 | ### RQ.SRS001-CU.LS |

Only the version attribute is always required; the others are optional. The version attribute allows tracking material changes to the requirement over the lifetime of the product and makes sure the tests get updated when a requirement has been updated to a new version.

Any text found before the next section is considered to be the description of the requirement.

1 | ### RQ.SRS001-CU.LS |
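To make the format concrete, here is a minimal Python sketch that scans a Markdown document for requirement headings. It is not how tfs requirements generate is implemented: the function name parse_requirements and the layout of the returned dictionaries are illustrative assumptions, and only the version attribute is parsed.

```python
import re

# A heading of any level whose title starts with the RQ. prefix.
HEADING = re.compile(r"^#+\s+(RQ\.\S+)\s*$")
# Only the `version` attribute is handled in this sketch.
VERSION = re.compile(r"^version:\s*(\S+)")


def parse_requirements(markdown_text):
    """Return a list of {"name", "version", "description"} dicts (hypothetical layout)."""
    requirements = []
    current = None
    expect_attributes = False
    for line in markdown_text.splitlines():
        heading = HEADING.match(line)
        if heading:
            current = {"name": heading.group(1), "version": None, "description": []}
            requirements.append(current)
            expect_attributes = True
            continue
        if line.startswith("#"):
            # Any other heading starts a new section and ends the description.
            current = None
            expect_attributes = False
            continue
        if current is None:
            continue
        if expect_attributes:
            if not line.strip():
                continue  # tolerate blank lines between heading and attributes
            expect_attributes = False
            version = VERSION.match(line.strip())
            if version:
                current["version"] = version.group(1)
                continue
        if line.strip():
            current["description"].append(line.strip())
    for requirement in requirements:
        requirement["description"] = " ".join(requirement["description"])
    return requirements


SAMPLE = """\
# Software Requirements Specification

## Requirements

### RQ.SRS001-CU.LS
version: 1.0

The [ls](#ls) utility SHALL list the contents of a directory.
"""

parsed = parse_requirements(SAMPLE)
for requirement in parsed:
    print(requirement["name"], requirement["version"])
```

The sketch treats everything between a requirement heading and the next heading as the description, which mirrors the rule stated above.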

Here is an example where multiple requirements are defined.

1 | ### RQ.SRS001-CU.LS |

✋ Except for the basic format to define the requirements described above, you can structure and organize the document in any way that is the most appropriate for your case.

Each requirement must be given a unique name. The most common convention is to start with the SRS number as a prefix, followed by a dot-separated name. The . separator serves to implicitly group the requirements. It is usually best to align these groups with the corresponding document sections. For example, we can create an Options section where we would add requirements for the supported options. Then all the requirements in this section would have the RQ.SRS001-CU.LS.Options. prefix.

1 | ### Options |

✋ Names are usually preferred over numbers to facilitate the movement of requirements between different parts of the document.

Generating Requirement Objects

Requirement class objects can be auto-generated from the Markdown requirements source files using the tfs requirements generate command.

1 | tfs requirements generate -h |

1 | usage: tfs requirements generate [-h] [input] [output] |

For example, given a requirements.md file with the following content.

requirements.md

# Software Requirements Specification

## Introduction

This software requirements specification covers the behavior of the standard

Unix `ls` utility.

## Requirements

### RQ.SRS001-CU.LS

version: 1.0

The [ls](#ls) utility SHALL list the contents of a directory.

You can generate a requirements.py file from it using the following command.

1 | cat requirements.md | tfs requirements generate > requirements.py |

The requirements.py will have the following content.

1 | # These requirements were auto generated |

For each requirement, a corresponding Requirement class object will be defined in addition to the Specification class object that describes the full requirements specification document.

1 | SRS001_ls_Unix_Command_Utility = Specification( |

The objects defined in the requirements.py can now be imported into test

source files and used to link with tests.
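The import examples in this document use the name RQ_SRS001_CU_LS for the requirement RQ.SRS001-CU.LS, which suggests that characters not allowed in a Python identifier are replaced with underscores. The helper below is a hypothetical sketch of that mapping, written only to show how to predict the object name; the exact rule used by tfs requirements generate is an assumption.

```python
import re


def to_identifier(requirement_name):
    """Replace every character that is not a letter, digit or underscore with `_`."""
    return re.sub(r"\W", "_", requirement_name)


print(to_identifier("RQ.SRS001-CU.LS"))
```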

Linking Requirements

Once you have written your requirements in a Markdown document as described in Requirements As Code, and have generated Requirement class objects from the requirements source file using the tfs requirements generate command as described in Generating Requirement Objects, you can link the requirements to any test by either setting the requirements attribute of an inline test or using the Requirements decorator for decorated tests.

For example,

1 | from requirements import RQ_SRS001_CU_LS |

The requirements argument takes a list of requirements, so you can link

any number of requirements to a single test.

Instead of passing a list, you can also pass Requirements object directly as follows,

1 | from requirements import RQ_SRS001_CU_LS |

where Requirements can be passed one or more requirements.

✋ Note that when linking requirements to a test, you should always call the requirement with the version that the test is verifying. If the version does not match the actual requirement version, a RequirementError exception will be raised. See Test Requirements.

For decorated tests, Requirements class can also act as a decorator.

For example,

1 | from requirements import RQ_SRS001_CU_LS |

Linking Specifications

When generating requirements, in addition to the Requirement class objects created for each requirement, a Specification class object is also generated that describes the whole requirements specification document. This object can be linked to higher level tests so that a coverage report can be easily calculated for a specific test program run.

To link a Specification class object to a test, either use the specifications parameter for inline tests or the Specifications decorator for decorated tests.

✋ Specifications are usually linked to higher level tests such as Feature, Suite, or Module.

For example,

1 | from requirements import SRS001_ls_Unix_Command_Utility |

One or more specifications can be linked.

Instead of passing a list, you can also pass Specifications object directly as follows,

1 | from requirements import SRS001_ls_Unix_Command_Utility |

✋ Note that a Specification class object can also be called with a specific version, just like Requirement class objects.

If a higher level test is defined using a decorated function, then you can use Specifications decorator.

For example,

1 | from requirements import SRS001_ls_Unix_Command_Utility |

Attributes of Decorated Tests

You can set attributes for decorated tests using different decorator classes

such as Flags class to set test flags, Name class to set test name, Examples class

to set examples etc.

For example,

1 |

|

When creating a test based on a decorated test, the attributes of the test get preserved unless you override them explicitly.

For example,

1 | # execute `test()` using a Scenario that will have |

However, if you call a decorated test within a test of the same type, then the attributes of the parent test are not changed in any way as the test is executed just like a function.

1 | with Scenario("my test"): |

Overriding Attributes

You can override any attributes of a decorated test by explicitly creating a test

that uses it as a base using the test parameter, or the run parameter if there is no need to pass

any arguments to the test, and define any new values of the attributes as needed.

For example, we can override the name and flags attributes of a decorated

test() while not modifying examples or any other attributes as follows:

1 | Scenario(name="my new name", flags=PAUSE_BEFORE, test=test)(x=1, y=1, result=2) |

✋ The test parameter sets the decorated test to be the base for the explicitly defined Scenario.

If you also want to set custom examples, you can do it as follows:

1 | Scenario(name="my new name", flags=PAUSE_BEFORE, |

Similarly, any other attribute of the scenario can be set. If the same attribute is set already for the decorated test then the value is overwritten.

Modifying Attributes

If you don’t want to completely override the attributes of the decorated test then you need to explicitly modify them by accessing the original values of the decorated test.

Any set attributes of the decorated test can be accessed as the attribute of the decorated test object. For example,

1 | from testflows.core import * |

Use the standard getattr() function to check whether a particular attribute is set and, if it is not, to fall back to a default value.

For example,

1 | Scenario("my new test", flags=Flags(getattr(test, "flags", None)) | PAUSE_BEFORE, test=test)(x=1, y=1, result=2) |

adds PAUSE_BEFORE flag to the initial flags of the decorated test.

✋ Note that you don’t want to modify the original attributes but instead you should always create a new object based on the initial attribute value.

Here is an example of how to add another example to existing examples

1 | Scenario("my new test", examples=Examples( |

Top Level Test

TestFlows only allows one top level test to exist in any given test program execution.

Because a Flow of tests can be represented as a rooted Tree, a test program exits on completion of the top level test. Therefore, any code that is defined after the top level test will not be executed.

1 | with Module("module"): |

✋ The top level test can't be an asynchronous test. See Async Tests.

Renaming Top Test

The top level test name can be changed using the --name command line argument.

1 | --name name test run name |

✋ Changing name of the top level test is usually not recommended as you can break any test name patterns that are not relative. For example, this can affect xfails, ffails, etc.

For example,

test.py

    from testflows.core import *

    with Module("regression"):
        with Scenario("my test"):
            pass

1 | python3 test.py --name "new top level test name" |

1 | Sep 25,2021 8:55:18 ⟥ Module new top level test name |

Adding Tags to Top Test

On the command line, tags can be added to the Top Level Test using the --tag option. One or more tags can be specified.

1 | --tag value [value ...] test run tags |

For example,

test.py

    from testflows.core import *

    with Module("regression"):
        with Scenario("my test"):
            pass

1 | python3 test.py --tag tag1 tag2 |

1 | Sep 25,2021 8:56:58 ⟥ Module regression |

Adding Attributes to Top Test

Attributes of the Top Level Test can be used to associate important information with your test run. For example, common attributes include tester name, build number, CI/CD job id, artifacts URL and many others.

✋ These attributes can be used extensively when filtering test runs in test results database.

On the command line, attributes can be added to the Top Level Test using the --attr option. One or more attributes can be specified.

1 | --attr name=value [name=value ...] test run attributes |

For example,

test.py

    from testflows.core import *

    with Module("regression"):
        with Scenario("my test"):
            pass

1 | python3 test.py --attr build=21.10.1 tester="Vitaliy Zakaznikov" job_id=4325432 job_url="https://jobs.server.com/4325432" |

1 | Sep 25,2021 9:04:11 ⟥ Module regression |

Custom Top Test Id

By default, the Top Level Test id is generated automatically using UUIDv1. However, if needed, you can specify a custom id value using the --id test program option.

✋ Specifying Top Level Test id should only be done by advanced users as each test run must have a unique id.

In general, the most common use case for a custom --id is when you need to know the Top Level Test id before running your test program. In that case, you would generate a UUIDv1 externally, for example using the uuid utility

1 | uuid |

1 | 52da6a26-1e54-11ec-9d7b-cf20ccc24475 |

and pass the generated value to your test program.
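If the uuid utility is not available, the Python standard library can produce the same kind of identifier. The sketch below generates a version 1 UUID and builds a command line that passes it through the --id option described above; the file name test.py is a placeholder.

```python
import sys
import uuid

# uuid1() builds a version 1 UUID from the host id and the current time.
test_id = str(uuid.uuid1())

# Command line for a test program; `test.py` is a placeholder file name.
command = [sys.executable, "test.py", "--id", test_id]
print(" ".join(command))
```

Because the id is known before the program starts, it can be recorded by a CI job and used later to look up the run.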

For example, given the following test program

test.py

    from testflows.core import *

    with Test("my test"):
        pass

if it is executed without --id, you can check the top level test id by looking at the test_id field of the raw output messages.

1 | python3 test.py -o raw |

Now if you specify --id, you will see that the test_id field of each message contains the new id.

1 | python3 test.py -o raw --id 112233445566 |

1 | {"message_keyword":"PROTOCOL",...,"test_id":"/112233445566",...} |

Test Program Tree

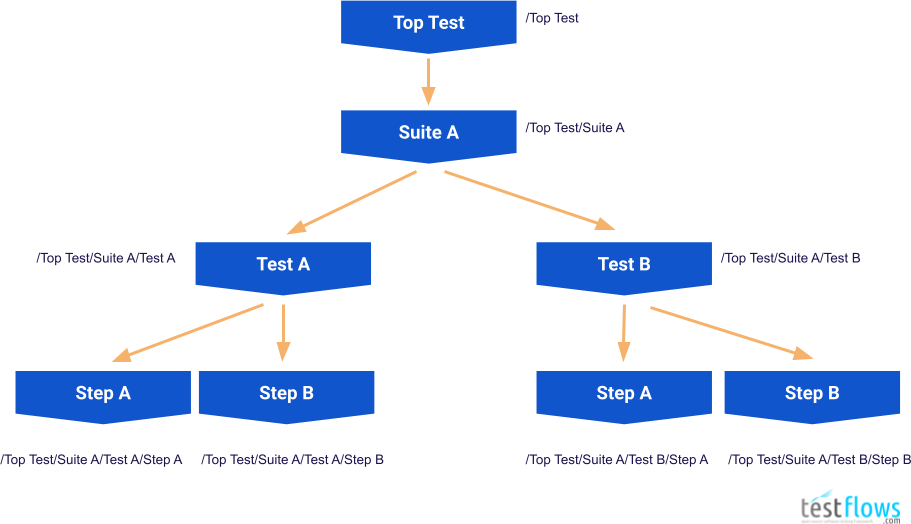

Executing any TestFlows test program results in a Tree. Below is a diagram that depicts a simple test program execution Tree.

🔎 Test Program Tree

During test program execution, when all tests are executed sequentially, the Tree is traversed in a depth first order.

The order of execution of the tests shown in the diagram above is as follows:

- /Top Test
- /Top Test/Suite A
- /Top Test/Suite A/Test A
- /Top Test/Suite A/Test A/Step A
- /Top Test/Suite A/Test A/Step B
- /Top Test/Suite A/Test B
- /Top Test/Suite A/Test B/Step A
- /Top Test/Suite A/Test B/Step B

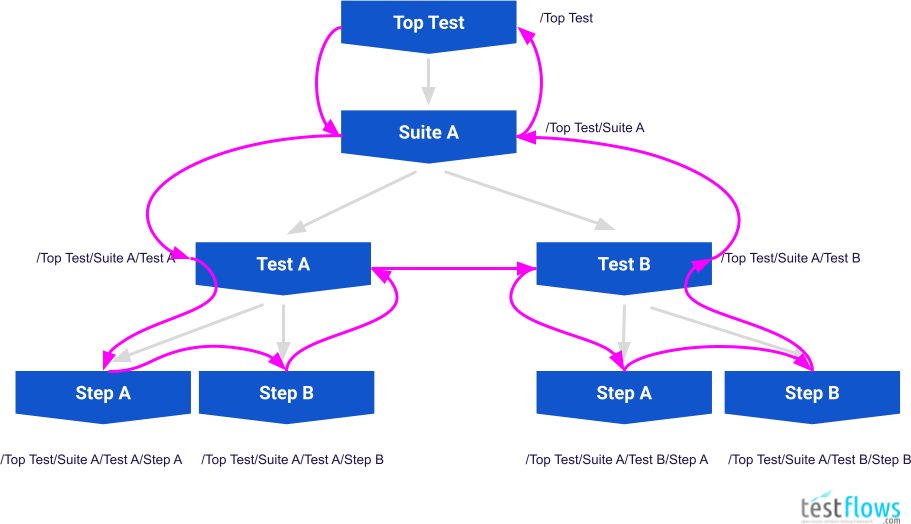

and this order of execution forms the Flow of the test program. This Flow can also be shown graphically as in the diagram below where depth first order of execution is highlighted by the magenta colored arrows.

🔎 Test Program Tree Traversal (sequential)
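The sequential Flow can be reproduced with a short depth first traversal. The sketch below uses nested dictionaries as a stand-in for the Tree; this is an illustrative data structure, not one used by the framework, and the function name flow is hypothetical.

```python
# Nested dictionaries stand in for the test Tree; not a framework data structure.
TREE = {
    "Top Test": {
        "Suite A": {
            "Test A": {"Step A": {}, "Step B": {}},
            "Test B": {"Step A": {}, "Step B": {}},
        }
    }
}


def flow(tree, prefix=""):
    """Yield test paths in depth first (pre-order) order."""
    for name, children in tree.items():
        path = f"{prefix}/{name}"
        yield path  # a test is visited before any of its children
        yield from flow(children, path)


order = list(flow(TREE))
for path in order:
    print(path)
```

Each path is built by joining the names of all ancestors with /, which is why patterns used for filtering tests can address a whole subtree by its prefix.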

When dealing with test names when Filtering Tests, it is best to keep the diagram above in mind to help visualize and understand how TestFlows works.

Logs

The framework produces LZMA compressed logs that contain JSON encoded messages. For example,

1 | {"message_keyword":"TEST","message_hash":"ccd1ad1f","message_object":1,"message_num":2,"message_stream":null,"message_level":1,"message_time":1593887847.045375,"message_rtime":0.001051,"test_type":"Test","test_subtype":null,"test_id":"/68b96288-be25-11ea-8e14-2477034de0ec","test_name":"/My test","test_flags":0,"test_cflags":0,"test_level":1,"test_uid":null,"test_description":null} |

Each message is a JSON object. Object fields depend on the type of message that is specified by the message_keyword.

Logs can be decompressed using either the standard xzcat utility

1 | xzcat test.log |

or tfs transform decompress command

1 | cat test.log | tfs transform decompress |
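Since the log can be read with xzcat, it can also be read from Python with the standard lzma and json modules. The sketch below assumes that the decompressed log holds one JSON object per line; the helper names read_messages and count_by_keyword are hypothetical and not part of the framework.

```python
import json
import lzma


def read_messages(path):
    """Yield each log message as a dict, assuming one JSON object per line."""
    with lzma.open(path, "rt", encoding="utf-8") as log:
        for line in log:
            line = line.strip()
            if line:
                yield json.loads(line)


def count_by_keyword(path):
    """Count messages per `message_keyword` value."""
    counts = {}
    for message in read_messages(path):
        keyword = message.get("message_keyword")
        counts[keyword] = counts.get(keyword, 0) + 1
    return counts
```

Calling count_by_keyword("test.log") on a saved log would give a quick overview of which message types it contains.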

Saving Log File

Test log can be saved into a file by specifying -l or --log option when running the test. For example,

1 | python3 test.py --log test.log |

Transforming Logs

Test logs can be transformed using tfs transform command. See tfs transform --help

for a detailed list of available transformations.

nice

The tfs transform nice command can be used to transform a test log into the nice output format, which is the default output format used for stdout.

For example,

1 | cat test.log | tfs transform nice |

1 | Jul 04,2020 19:20:21 ⟥ Module filters |

short

The tfs transform short command can be used to transform test log into a short output format that contains test procedures

and test results.

For example,

1 | cat test.log | tfs transform short |

1 | Module filters |

slick

The tfs transform slick command can be used to transform test log into a slick output format that contains only test names

with results provided as icons in front of the test name. This output format is very concise.

For example,

1 | cat test.log | tfs transform slick |

1 | ➤ Module filters |

dots

The tfs transform dots command can be used to transform test log into a dots output format, which outputs dots

for each executed test.

For example,

1 | cat test.log | tfs transform dots |

1 | ......................... |

raw

The tfs transform raw command can be used to transform a test log into a raw output format that contains raw JSON

messages.

For example,

1 | cat test.log | tfs transform raw |

1 | {"message_keyword":"PROTOCOL","message_hash":"489eeba5","message_object":0,"message_num":0,"message_stream":null,"message_level":1,"message_time":1593904821.784232,"message_rtime":0.001027,"test_type":"Module","test_subtype":null,"test_id":"/ee772b86-be4c-11ea-8e14-2477034de0ec","test_name":"/filters","test_flags":0,"test_cflags":0,"test_level":1,"protocol_version":"TFSPv2.1"} |

compact

The tfs transform compact command can be used to transform a test log into a compact format that only contains

raw JSON test definition and result messages while omitting all messages for the steps.

It is used to create compact test logs used for comparison reports.

compress

The tfs transform compress command is used to compress a test log with LZMA compression algorithm.

decompress

The tfs transform decompress command is used to decompress a test log compressed with LZMA compression algorithm.

Creating Reports

Test logs can be used to create reports using tfs report command. See tfs report --help for a list of available reports.

Results Report

A results report can be generated from a test log using tfs report results command.

The report can be generated in either Markdown format (default) or JSON format

by specifying --format json option.

The report in Markdown can be converted to HTML using tfs document convert command.

1 | Generate results report. |

For example,

1 | cat test.log | tfs report results | tfs document convert > report.html |

Coverage Report

A requirements coverage report can be generated from a test log using the tfs report coverage command. The report is created in Markdown and can be converted to HTML using the tfs document convert command.

1 | Generate requirements coverage report. |

For example,

1 | cat test.log | tfs report coverage requirements.py | tfs document convert > coverage.html |

Metrics Report

You can generate metrics report using tfs report metrics command.

1 | Generate metrics report. |

Comparison Reports

A comparison report can be generated using one of the tfs report compare commands.

1 | Generate comparison report between runs. |

Compare Results

A results comparison report can be generated using tfs report compare results command.

1 | Generate results comparison report. |

Compare Metrics

A metrics comparison report can be generated using tfs report compare metrics command.

1 | Generate metrics comparison report. |

Specification Report

A test specification for the test run can be generated using tfs report specification command.

1 | Generate specifiction report. |

Test Results

Any given test will have one of the following results.

OK

Test has passed.

Fail

Test has failed.

Error

Test produced an error.

Null

Test result was not set.

Skip

Test was skipped.

XOK

OK result was crossed out. Result is considered as passing.

XFail

Fail result was crossed out. Result is considered as passing.

XError

Error result was crossed out. Result is considered as passing.

XNull

Null result was crossed out. Result is considered as passing.

Test Parameters

Test parameters can be used to set attributes of a test.

✋ Do not confuse test parameters with attributes, which is just one of the attributes of the test object and is specified using the attributes parameter when calling or creating a test.

Here is a list of the most common parameters for a test:

- name

- flags

- uid

- tags

- attributes

- requirements

- examples

- description

- xargs

- xfails

- xflags

- ffails

- repeats

- retries

- timeouts

- only

- skip

- start

- end

- only_tags

- skip_tags

- random

- limit

- args

✋ Most parameter names match the names of the attributes of the test which they set. For example, the name parameter sets the name attribute of the test.

✋ Note that the Args decorator can be used to set values of any parameter of the test. However, many parameters have corresponding dedicated decorators available to be used instead.

When a test is defined inline, parameters can be set when the test definition class is instantiated. The first parameter is always name, which sets the name of the test. The other parameters are usually specified using keyword arguments.

For example,

1 | with Scenario("My test", description="This is a description of an inline test"): |

Test Arguments

args

The args parameter is used to set the arguments of the test.

✋ Do not confuse the args parameter with the Args decorator. See the Args decorator for more details.

1 |

|

If test arguments are passed during the test call, then they will overwrite the args.

For example,

1 |

|

Args

The Args class can be used as a decorator to set any parameters of the test. This is especially useful when there is no dedicated decorator available for the parameter.

Each test parameter can be specified using a corresponding keyword argument.

1 |

For example, the Name decorator can be used to set the name parameter of the test, however, the same can be done using the Args decorator.

1 |

|

It can also be used to specify default values of test arguments by setting the args parameter

to a dictionary where the key is the argument name and the value is the argument’s value.

1 |

|

Naming Tests

You can set the name of any test either by setting the name parameter of the inline test

or using the Name decorator if the test is defined as a decorated function.

The name of the test can be accessed using the name attribute of the test.

name

The name parameter of the test can be used to set the name of any inline test. The name parameter must be passed a str, which defines the name of the test.

✋ For all test definition classes the first parameter is always the name.

For example,

1 | with Test("My test") as test: |

Name

A Name decorator can be used to set the name of any test that is defined using a decorated function.

✋ The name of test defined using a decorated function is set to the name of the function if the Name decorator is not used.

For example,

1 |

|

or if the Name decorator is not used

✋ Note that any underscores will be replaced with spaces in the name of the test.

1 |

|
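The naming rule for decorated tests without a Name decorator can be illustrated in plain Python. The helper default_test_name below is hypothetical and only mimics the rule stated above; it is not the framework's own code.

```python
def default_test_name(func):
    """Derive a test name from a function name by replacing underscores with spaces."""
    return func.__name__.replace("_", " ")


def my_simple_test():
    pass


print(default_test_name(my_simple_test))  # my simple test
```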

Test Flags

You can set the Test Flags of any test either by setting the flags parameter of the inline test

or using the Flags decorator if the test is defined as a decorated function.

The flags of the test can be accessed using the flags attribute of the test.

flags

The flags parameter of the test can be used to set the flags of any inline test. The flags parameter must be passed a valid flag or multiple flags combined with the binary OR operator.

For example,

1 | with Test("My test", flags=TE) as test: |

Flags

A Flags decorator can be used to set the flags of any test that is defined using a decorated function.

For example,

1 |

|

Test Tags

You can add tags to any test either by setting tags parameter of the inline test

or using Tags decorator if the test is defined as a decorated function. The values of the tags can be accessed

using the tags attribute of the test.

tags

The tags parameter of the test can be used to set tags of any inline test. The tags parameter

can be passed either a list, tuple or a set of tag values. For example,

1 | with Test("My test", tags=("tagA", "tagB")) as test: |

Tags

A Tags decorator can be used to set tags of any test that is defined using a decorated function. For example,

1 |

|

Test Attributes

You can add attributes to any test either by setting attributes parameter of the inline test

or by using Attributes decorator if the test is defined as a decorated function. The values of the attributes can be accessed

using the attributes attribute of the test.

attributes

The attributes parameter of the test can be used to set attributes of any inline test. The attributes parameter

can be passed either a list of (name, value) tuples or Attribute class instances. For example,

1 | with Test("My test", attributes=[("attr0", "value"), Attribute("attr1", "value")]) as test: |

Attributes

An Attributes decorator can be used to set attributes of any test that is defined using a decorated function. For example,

1 |

|

Test Requirements

You can add requirements to any test either by setting requirements parameter of the inline test

or by using Requirements decorator if the test is defined as a decorated function. The values of the requirements can be accessed

using the requirements attribute of the test.

✋ Requirement class instances must always be called with the version number the test is expected to verify. A RequirementError exception will be raised if the version does not match the version of the instance.

requirements

The requirements parameter of the test can be used to set requirements of any inline test. The requirements parameter must be passed a list of called Requirement instances.

For example,

1 |

|

Requirements

A Requirements decorator can be used to set requirements attribute of any test that is defined using a decorated function.

The decorator must be called with one or more called Requirement instances. For example,

1 | RQ1 = Requirement("RQ1", version="1.0") |

Test Specifications

You can add specifications to higher level tests either by setting specifications parameter of the inline test

or using Specifications decorator if the test is defined as a decorated function. The values of the specifications can be accessed

using the specifications attribute of the test.

✋ Specification class instances may be called with the version number the test is expected to verify. A SpecificationError exception will be raised if the version does not match the version of the instance.

specifications

The specifications parameter of the test can be used to set specifications of any inline test. The specifications parameter must be passed a list of Specification class object instances.

For example,

1 | from requirements import SRS001 |

Specifications

A Specifications decorator can be used to set specifications attribute of a higher level test that is defined using a decorated function.

The decorator must be called with one or more Specification class object instances. For example,

1 | from requirements import SRS001 |

Test Examples

You can add examples to any test by setting examples parameter of the inline test

or using Examples decorator if the test is defined as a decorated function. The examples can be accessed

using the examples attribute of the test.

examples

The examples parameter of the test can be used to set examples of any inline test. The examples parameter

must be passed a table of examples, which can be defined using Examples class for an inline test

or using the same Examples class as a decorator if the test is defined as a decorated function.

The rows of the examples table can be accessed

using the examples attribute of the test.

✋ Usually, examples are used only with test outlines. Please see Outline for more details.

For example,

1 | with Test("My test", examples=Examples("col0 col1", [("col0_row0", "col1_row0"), ("col0_row1", "col1_row1")])) as test: |

Examples

An Examples decorator can be used to set examples attribute of any test that is defined using a decorated function

or used as an argument of the examples parameter for the test.

The Examples class defines a table of examples and should be passed a header and a list for the rows.

✋ Usually, examples are used only with test outlines. Please see Outline for more details.

For example,

1 |

|

Test XFails

You can specify test results to be crossed out, known as xfails for any test

either by setting xfails parameter of the inline test or by using XFails decorator

if the test is defined as a decorated function. See Crossing Out Results

for more information.

xfails

The xfails parameter of the test can be used to set xfails of any inline test. The xfails parameter

must be passed a dictionary of the form

1 | { |

where key pattern is a test pattern that matches one or more tests for which one

or more results can be crossed out that are specified by the list.

A list must contain one or more (result, "reason"[, when][, result_message]) tuples where

result shall be the result that you want to cross out, for example Fail,

the reason shall be a string that specifies a reason why this result is being

crossed out. You can also specify an optional when condition that shall be a function that

takes a current test object as the first and only argument and shall either return

True or False. The cross-out will only be applied if the when function returns True.

For fine-grained control over which test results should be crossed out, you can also specify result_message to select only results with a specific message. It shall be a regular expression that is matched against the result message with the DOTALL|MULTILINE flags set. If result_message is specified, then a test result will only be crossed out if a match is found.

✋ A reason for a crossed out result can be a URL such as for an issue in an issue tracker.

For example,

1 | with Suite("My test", xfails={"my_test": [(Fail, "needs to be investigated")]}): |

or

1 | Suite(run=my_suite, xfails={"my test": [(Fail, "https://my.issue.tracker.com/issue34567")]}) |

✋ If the test pattern is not absolute, then it is anchored to the test where xfails is specified.
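The way the elements of an xfails tuple combine can be sketched in plain Python. This is only an illustration of the rules described above, not the framework's implementation: results are represented as strings, the function name should_cross_out is hypothetical, the optional elements are read by position (so None must be passed for when if only result_message is needed), and using re.search rather than another matching function is an assumption.

```python
import re


def should_cross_out(result, message, test, xfail_entries):
    """Return the reason if `result` should be crossed out, else None.

    Each entry is (result, reason[, when][, result_message]).
    """
    for entry in xfail_entries:
        expected, reason, *rest = entry
        when = rest[0] if len(rest) > 0 else None
        pattern = rest[1] if len(rest) > 1 else None
        if expected != result:
            continue  # this entry is for a different result
        if when is not None and not when(test):
            continue  # the condition rejected the current test
        if pattern is not None and not re.search(
            pattern, message or "", re.DOTALL | re.MULTILINE
        ):
            continue  # the result message did not match
        return reason
    return None


entries = [("Fail", "known issue", None, r"timed out")]
print(should_cross_out("Fail", "operation timed out after 10s", None, entries))
```

All conditions in an entry must hold for the cross-out to apply, which is what makes result_message useful for crossing out one known failure while still reporting any new one.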

XFails

The XFails decorator can be used to set xfails attribute of any test that is defined using a decorated function

or used as an extra argument when defining a row for the examples of the test.

The XFails decorator takes a dictionary of the same form as the xfails parameter, where

you can also specify when and result_message arguments.

1 |

|

Test XFlags

You can specify flags to be externally set or cleared for any test by setting xflags parameter or using XFlags decorator

for decorated tests. See Setting or Clearing Flags.

xflags

The xflags parameter of the test can be used to set xflags of a test. The xflags parameter

must be passed a dictionary of the form

1 | { |

where key pattern is a test pattern that matches one or more tests for which

flags will be set or cleared. The flags to be set or cleared are

specified by a tuple of the form (set_flags, clear_flags[, when]) where the

first element specifies flags to be set, and the second element specifies

flags to be cleared. An optional when condition can be specified that shall be a function that

takes a current test object as the first and only argument and shall either return

True or False. If specified, the flags will only be set and cleared if

the when function returns True.

Here is an example to set TE flag and to clear the SKIP flag,

1 | with Suite("My test", xflags={"my_test": (TE, SKIP)}): |

or just set SKIP flag without clearing any other flag

1 | Suite(run=my_suite, xflags={"my test": (SKIP, 0)}) |

and multiple flags can be combined using the binary OR (|) operator.

1 | # clear SKIP and TE flags for "my test" |

✋ If the test pattern is not absolute, then it is anchored to the test where xflags is being specified.
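The effect of a (set_flags, clear_flags) pair can be shown with ordinary bit operations. The sketch below uses a stand-in IntFlag class whose names mirror the flags mentioned in the text, but the bit values are made up and the order of operations (set first, then clear) is an assumption.

```python
from enum import IntFlag


class Flag(IntFlag):
    """Stand-in flags; the names mirror the text but the bit values are made up."""
    TE = 1
    SKIP = 2
    PAUSE_BEFORE = 4


def apply_xflags(flags, set_flags, clear_flags):
    """Set `set_flags`, then clear `clear_flags` (the order is an assumption)."""
    return Flag((int(flags) | int(set_flags)) & ~int(clear_flags))


# Set TE and clear SKIP on a test that currently has only SKIP set.
print(apply_xflags(Flag.SKIP, Flag.TE, Flag.SKIP) == Flag.TE)
```

Passing 0 as the second element of the pair leaves all existing flags untouched, which corresponds to the (SKIP, 0) example above.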

XFlags

The XFlags decorator can be used to set xflags attribute of any test that is defined using a decorated function

or used as an extra argument when defining a row for the examples of the test.

The XFlags decorator takes a dictionary of the same form as the xflags parameter.

1 |

|

Test XArgs

You can specify test parameters to be set externally by setting the xargs parameter or using the XArgs decorator for decorated tests. The xargs parameter can be used to set most test parameters externally when the parameter lacks a dedicated method to do it. For example, the xflags should be used instead of the xargs to externally set the flags of the test.

The following parameters can’t be set:

xargs

The xargs parameter of the test can be used to set most other parameters of the test. The xargs parameter must be passed a dictionary of the form

1 | { |

where key pattern is a test pattern that matches one or more tests for which

parameters will be set. An optional when condition can be specified that shall be a function that

takes a current test object as the first and only argument and shall either return

True or False. If specified, the parameters will only be set if

the when function returns True.

Here is an example of setting the TE flag,

1 | with Suite("My test", xargs={"my_test": ({"flags": TE},)}): |

but note that it is recommended to use xflags to set flags externally.

✋ If the test pattern is not absolute, then it is anchored to the test where the xargs is being specified.

XArgs

The XArgs decorator can be used to set the xargs attribute of any test that is defined using a decorated function

or used as an extra argument when defining a row for the examples of the test.

The XArgs decorator takes a dictionary of the same form as the xargs parameter. For example,

1 |

|

Test FFails

You can force the result, including Fail result, of any test by setting

ffails parameter or using FFails decorator

for decorated tests. See Forcing Results.

ffails

The ffails parameter of the test can be used to force any result of a test, including Fail while skipping the execution of its test body. The ffails parameter must be passed a dictionary of the form

1 | { |

where key pattern is a test pattern that matches one or more tests for which the result will be set by force, and the body of the test will not be executed. The forced result is specified by a two-tuple of the form (Result, reason), where the first element specifies the forced test result, such as Fail, and the second element specifies the reason for forcing the result as a string.

For example,

with Suite("My test", ffails={"my_test": (Fail, "test gets stuck")}):

or

Suite(run=my_suite, ffails={"my test": (Skip, "not supported")})

✋ If the test pattern is not absolute, then it is anchored to the test where ffails is being specified.

FFails

The FFails decorator can be used to set the ffails attribute of any test that is defined using a decorated function or used as an extra argument when defining a row for the examples of the test.

The FFails decorator takes a dictionary of the same form as the ffails parameter.

1 |

|

The optional when function can also be specified.

def version(*versions):

Test Timeouts

You can set test timeouts using the timeouts parameter.

Timeouts

The Timeouts object can be either used as the value of the timeouts parameter or as a decorator that can be applied to any decorated test.

Timeouts takes as its argument a list of objects that each specify a timeout, either as a Timeout object or as a tuple of arguments that will be passed to Timeout.

1 | [ |

The Timeouts decorator should be used when you want to specify more than one timeout.

✋ Even though more than one timeout can be specified, only the timeout with the smallest value will be the effective timeout.

1 |

|
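The rule that only the smallest timeout is effective, and that list entries may be either objects or argument tuples, can be sketched in plain Python. `TimeoutSpec`, `normalize`, and `effective_timeout` are hypothetical stand-ins, not the framework's classes, and the sketch assumes all timeouts share the same start time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TimeoutSpec:
    """Hypothetical stand-in that mirrors the documented Timeout arguments."""
    timeout: float
    message: Optional[str] = None
    started: Optional[float] = None
    name: Optional[str] = None


def normalize(entry):
    """Accept either a TimeoutSpec or a tuple of its arguments."""
    if isinstance(entry, TimeoutSpec):
        return entry
    return TimeoutSpec(*entry)


def effective_timeout(entries):
    """Return the entry with the smallest timeout value."""
    return min((normalize(e) for e in entries), key=lambda spec: spec.timeout)


timeouts = [TimeoutSpec(timeout=10, message="too slow"), (5, "much too slow"), (30,)]
print(effective_timeout(timeouts).timeout)  # 5
```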

For a single timeout, the Timeout decorator can be used instead.

Timeout

The Timeout object can be either used as one of the values in the list passed to the timeouts parameter or as a decorator that can be applied to any decorated test.

Timeout(timeout, message=None, started=None, name=None)

where

- timeout: timeout in sec

- message: custom timeout error message, default: None

- started: start time, default: None (set to the current test’s start time)

- name: name, default: None

For example,

with Test("my test", timeouts=[Timeout(10)]):

or it can be used as a decorator as follows:

1 |

|

The when Condition

Some parameters support a when condition, specified as a function, as the last element. If present, the when function is called before test execution. The boolean result returned by the when function determines whether the forced result is applied: it is applied if the function returns True and is not applied if it returns False. The when function must take one argument, which is the instance of the test.

✋ The optional when function can define any logic that is needed to determine if some condition is met. Any callable that takes the current test object as its first and only argument can be used.

Here is an example of using ffails with a when condition:

def version(*versions):
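As a rough illustration of how a when function factory such as version() might work together with an ffails entry, here is a self-contained sketch. The `test.context.version` variable, the `forced_result` helper, and the string `"Fail"` standing in for the Fail result are assumptions for illustration only; the framework evaluates the condition itself.

```python
from types import SimpleNamespace


def version(*versions):
    """Build a when function that is true if the test's version is listed."""
    def when(test):
        return test.context.version in versions
    return when


def forced_result(test, entry):
    """Return (result, reason) if the ffails entry applies, else None."""
    result, reason, *rest = entry          # (Result, reason[, when])
    when = rest[0] if rest else None
    if when is not None and not when(test):
        return None                        # condition not met: run the test normally
    return result, reason


# Stand-in tests whose context carries a hypothetical `version` variable.
old = SimpleNamespace(context=SimpleNamespace(version="2.1.2"))
new = SimpleNamespace(context=SimpleNamespace(version="3.0.0"))

entry = ("Fail", "not supported", version("2.1.2"))
print(forced_result(old, entry))  # ('Fail', 'not supported')
print(forced_result(new, entry))  # None
```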

Specialized keywords

The framework encourages the use of specialized keywords when writing test scenarios because they provide much-needed context for your steps.

The specialized keywords map to core Step, Test, Suite, and Module test definition classes as follows:

- Module is defined as a Module

- Suite is defined as a Feature

- Test is defined as a Scenario

- Step is defined as one of the following:

- Given is used to define a step for precondition or setup

- Background is used to define a step for a complex precondition or setup

- When is used to define a step for an action

- And is used as a continuation of the previous step

- By is used to define a sub-step

- Then is used to define a step for positive assertion

- But is used to define a step for negative assertion

- Finally is used to define a cleanup step

Semi-Automated and Manual Tests

Tests can be semi-automated and include one or more manual steps, or be fully manual.

✋ It is common to use the input() function to prompt for input during the execution of semi-automated or manual tests. See Reading Input.

Semi-Automated Tests

Semi-automated tests are tests that have one or more steps with the MANUAL flag set.

✋ The MANUAL test flag is propagated down to all sub-tests.

For example,

from testflows.core import *

When a semi-automated test is run, the test program pauses and asks for input for each manual step.

Sep 06,2021 18:39:00 ⟥ Scenario my mixed scenario

Manual Tests

A manual test is just a test that has the MANUAL flag set at the test level. Any sub-tests, such as steps, inherit the MANUAL flag from the parent test.

✋ Manual tests are best executed using the manual output format.

For example,

from testflows.core import *

When a manual test is run, the test program pauses for each test step as well as to get the result of the test itself.

Sep 06,2021 18:44:30 ⟥ Scenario manual scenario, flags:MANUAL

Manual With Automated Steps

A test that has the MANUAL flag set can also include automated steps, which are marked as automated using the AUTO flag.

For example,

from testflows.core import *

When the above example is executed, it will produce the following output that shows that the result for /manual scenario/automated action was set automatically based on the automated actions performed in this step.

Oct 31,2021 18:24:53 ⟥ Scenario manual scenario, flags:MANUAL

Test Definition Classes

Module

A Module can be defined using Module test definition class or TestModule decorator.

1 |

|

or inline as

with Module("module"):

Suite

A Suite can be defined using Suite test definition class or TestSuite decorator.

1 |

|

or inline as

with Suite("My suite"):

Feature

A Feature can be defined using Feature test definition class or TestFeature decorator.

1 |

|

or inline as

with Feature("My feature"):

Test

A Case can be defined using Test test definition class or TestCase decorator.

1 |

|

or inline as

with Test("My testcase"):

✋ Note that here the word test is used to define a Case to match the most common meaning of the word test. When someone says they will run a test, they most likely mean they will run a test Case.

Scenario

A Scenario can be defined using Scenario test definition class or TestScenario decorator.

1 |

|

or inline as

with Scenario("My scenario"):

Check

A Check can be defined using Check test definition class or TestCheck decorator

1 |

|

or inline as

with Check("My check"):

and is usually used inside either Test or Scenario to define an inline sub-test

with Scenario("My scenario"):

Critical, Major, Minor

Critical, Major, or Minor checks can be defined using the Critical, Major, or Minor test definition class, respectively, or similarly using the TestCritical, TestMajor, or TestMinor decorators

1 |

|

or inline as

with Critical("My critical check"):

and are usually used inside either Test or Scenario to define inline sub-tests.

with Scenario("My scenario"):

These classes are usually used for the classification of checks during reporting.

1 scenario (1 ok)

Example

An Example can only be defined inline using the Example test definition class. There is no decorator to define it outside of an existing test. An Example is of a Test Type and is used to define one or more sub-tests. Usually, Examples are created automatically using Outlines.

with Scenario("My scenario"):

Outline

An Outline can be defined using the Outline test definition class or the TestOutline decorator. An Outline is a sub-type of the Test type, but you can change the type by passing it another Type or a Sub-Type such as Scenario or Suite.

However, because Outlines are meant to be called from other tests or used with Examples, it is best to define an Outline using the TestOutline decorator as follows.

from testflows.core import *

When Examples are defined for the Outline and the outline is called with no arguments from a test of a higher Type than the outline itself, the outline iterates over all the examples defined in the Examples table. For example, if you run the example above, which executes the outline with no arguments, you will see that the outline iterates over all the examples in the Examples table, where each example (a row in the table) defines the values of the arguments for the outline.

Jul 05,2020 18:16:34 ⟥ Scenario outline

If we run the same outline with arguments, then the outline will not use the Examples but instead will use the argument values that were provided to the outline. For example,

with Scenario("My scenario"):

will produce the following output.

Jul 05,2020 18:23:02 ⟥ Scenario My scenario
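The two calling modes of an outline described above (iterate over the examples when called with no arguments, use the given values otherwise) can be mimicked with a small plain-Python decorator. This is only a conceptual sketch: the `outline` decorator, the `greet` function, and the example rows are hypothetical, and the sketch ignores the rule about the Type of the calling test.

```python
def outline(examples):
    """Hypothetical decorator: attach an examples table to a function."""
    def decorate(func):
        def run(*args):
            if args:
                # Explicit arguments were given, so the examples are not used.
                return [func(*args)]
            # No arguments: call the function once per row of the table.
            return [func(*row) for row in examples]
        return run
    return decorate


@outline(examples=[("John", 21), ("Ann", 34)])
def greet(name, age):
    return f"{name} is {age}"


print(greet())            # ['John is 21', 'Ann is 34']
print(greet("Bob", 50))   # ['Bob is 50']
```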

Combination

A Combination is not meant to be used explicitly, and in most cases it is only used internally to represent each combination of a Sketch.

Sketch

A Sketch is defined using the TestSketch decorator. In most cases you should not use the Sketch test definition class directly, as it will not execute its Combinations. A Sketch is a sub-type of the Test type, but you can specify a different type. For example, you can pass a specific Type or a Sub-Type such as Scenario, Suite, or Feature when defining a TestSketch.

Because Sketches are designed to execute different Combinations, one for each Combination defined by the either() function, it is best to define a Sketch only using a TestSketch decorator.

✋ TestSketch is designed to work with the either() function, which is used to define combination variables and their possible values.

For example,

def add(a, b):

The TestSketch above calls the add() function with different combinations of its a and b parameters.

The Sketch checks combinations where the a argument is either 1 or 2 and the b argument is either 2 or 3.

Therefore, the following combination patterns are covered:

- pattern #0: add(1,2)

- pattern #1: add(1,3)

- pattern #2: add(2,2)

- pattern #3: add(2,3)
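The four patterns are the Cartesian product of the possible values of a and b. As a plain-Python illustration that does not use the framework (it relies on `itertools.product` rather than either(), and the body of `add` is an assumed simple sum), the same combinations can be enumerated like this:

```python
from itertools import product


def add(a, b):
    # Assumed body for illustration: a simple sum.
    return a + b


a_values = [1, 2]
b_values = [2, 3]

# product() varies the last argument fastest, giving
# (1, 2), (1, 3), (2, 2), (2, 3) in that order.
for number, (a, b) in enumerate(product(a_values, b_values)):
    print(f"pattern #{number}: add({a},{b}) = {add(a, b)}")
```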

You can see this from the output of the test.